The high cost of free open-source generative software.

By Luke Arrigoni, Founder, Loti

The rise of deepfake technology poses significant risks to celebrities, high-net-worth individuals, and the general public, with its ability to manipulate reality, infringe on privacy, and facilitate crimes ranging from fraud to character assassination. This article delves into recent events highlighting these dangers, emphasizing the need for vigilance and protective measures.

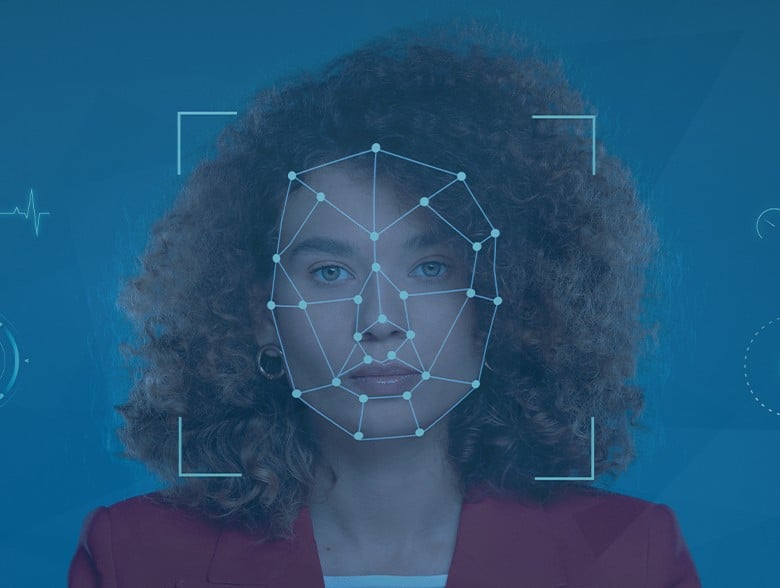

In an age where technology is advancing at an unprecedented pace, the emergence of generative tools that can produce deepfakes, such as Stable Diffusion, poses a profound and unsettling threat. Capabilities of this kind, once the domain of specialized experts, are now alarmingly accessible to the general public, raising serious concerns about privacy, security, and the integrity of digital content.

The disconcerting ease with which Stable Diffusion and similar software can be downloaded and operated has opened a Pandora’s box of potential misuse. With just a few clicks, an individual with minimal technical skills can access tools capable of generating hyper-realistic deepfakes. Using sophisticated artificial intelligence algorithms, this software can manipulate images and videos to the point that fabricated content appears strikingly real, blurring the line between truth and deception.

This ease of access dramatically lowers the barrier to entry for creating deepfakes, democratizing a technology that was once restricted to those with substantial computational resources and technical expertise. Now, anyone with a basic computer and internet access can harness the power of software like Stable Diffusion. This shift has profound implications for personal privacy and security. It raises alarming questions about the potential for misuse, particularly against public figures, celebrities, and high-net-worth individuals, who are often the targets of such malicious activities.

The growing availability of this technology has been met with widespread concern from cybersecurity experts, legal professionals, and ethicists. They warn of a future where the authenticity of digital content can no longer be taken for granted, a world where seeing is no longer believing. The potential for harm is vast – from damaging the reputations of individuals through fabricated scandalous content to influencing public opinion through false representations of political figures.

As these tools become more user-friendly and accessible, combating deepfake content becomes markedly harder. Traditional methods of verification are becoming less effective against the advancing capabilities of deepfake technology. This creates a pressing need for new solutions to detect and combat these digital deceptions.

Recent Incidents Involving Celebrities

- Rashmika Mandanna Incident: A deepfake video that appeared to show Indian actress Rashmika Mandanna in an elevator caused widespread concern. It was later revealed to be a fabrication that used the body of British-Indian influencer Zara Patel. This incident underscored the ease with which AI can manipulate images and the severe impact it can have on victims, leading to distress and reputational damage.

- Katrina Kaif Deepfake: Another troubling case involved Bollywood actress Katrina Kaif. An original scene from her movie “Tiger 3” was morphed to present her inappropriately, causing public outrage. This example illustrates not only the ease of creating convincing deepfakes but also their potential to harm the dignity and public image of individuals.

Impact on High Net Worth Individuals and Businesses

- Rapid Increase in Deepfakes: The number of deepfake videos online has surged at an alarming annual rate of 900%, according to the World Economic Forum. This increase has led to a rise in cases involving harassment, revenge, crypto scams, and more, signaling a growing threat that can affect anyone, particularly those in the public eye or with substantial assets.

- Elon Musk Impersonation: In a notable example, scammers used a deepfake video of Elon Musk to promote a fraudulent cryptocurrency scheme, resulting in significant financial losses for those duped by the scam. This incident highlights the potential for deepfakes to be used in sophisticated financial frauds targeting unsuspecting investors.

- Violation of Privacy: The use of deepfakes to violate individual privacy is a significant concern. For instance, deepfake videos have been created showing celebrities’ faces superimposed onto pornographic content, causing considerable distress and reputational damage.

- Targeting Businesses: Deepfakes pose a serious threat to businesses as well, with instances of extortion, blackmail, and industrial espionage. A notable case involved cybercriminals deceiving a bank manager in the UAE using a voice deepfake, leading to a $35 million theft. In another instance, scammers attempted to fool Binance, a large cryptocurrency platform, using a deepfake in an online meeting.

The Financial Sector’s Struggle

The financial sector, including banks and FinTech companies, is increasingly targeted by deepfake scams. These scams exploit vulnerabilities in digital systems and the trust of individuals, leading to significant financial losses and a loss of consumer confidence. Banks are now investing in defensive technology and educating consumers about these risks.

Conclusion: The Need for Protective Measures

The incidents mentioned above underscore the urgent need for protective measures against deepfake technology. This is where services like Loti come into play, offering tools to detect and combat unauthorized use of a person’s image or voice. For celebrities, high-net-worth individuals, and businesses, employing such protective measures is not just about safeguarding their privacy and reputation but also about defending against potential financial and emotional harm.

While deepfake technology continues to evolve and pose new challenges, proactive steps and awareness can help mitigate its risks. Companies like Loti offer a valuable resource in this ongoing battle, helping to ensure that personal and professional integrity remains intact in the digital age.

About the Author

Luke Arrigoni is an expert in artificial intelligence with a rich history of collaboration with leading U.S. companies like UPS, J&J, Getty, AT&T, Goldman Sachs, CAA, and Sephora. He has built robust machine learning and data science programs, and his work predates the formal recognition of such technologies in the industry. His entrepreneurial journey includes successfully building and selling an econometrics business, showcasing his acumen in creating and scaling innovative tech ventures. Currently, as the Co-Founder of Loti (https://goloti.com), Arrigoni leads in digital identity protection, leveraging advanced AI to monitor and control personal image and video dissemination online, including takedowns of unauthorized content and deepfakes.
