Why Companies Need More Than Technical Indicators to Identify Their Biggest Threats Before They Do Harm

By David A. Sanders, Director of Insider Threat Operations, Haystax

Most corporate insider threat programs are structured and equipped to mitigate adverse events perpetrated by trusted insiders only after they have occurred. But proactive insider risk management is possible – and it starts with a robust approach to detection.

Consider this scenario, based on a real-life case, in which a concerning insider threat event turns out to be more complicated than expected:

John commented to other employees that it would be easy to take down the new cloud services his company recently migrated to from their on-premises systems. The employees reported the comment to their manager, who reported it to human resources and ultimately the company’s insider threat program. An investigation revealed that John was angry because his role had changed with the new architecture. In addition, he was clinically depressed, off medication, and had suicidal thoughts. The investigative results prompted a coordinated response among the insider threat program, security, legal and human resources. The threat was mitigated, with the final step of referring John to the employee assistance program.

Because the insider threat team was notified about one behavioral indicator of a high-impact event, additional indicators were gathered and assessed to determine that John was a potential threat to the company and to himself. In doing so, the company was able to intervene and proactively mitigate an insider threat event before it occurred. The resulting cost and impact were minimal. By contrast, the projected cost and impact of the cloud services being taken off-line for one day were very high.

It is impossible to know whether John would have committed an act of sabotage or self-harm, but the mitigation efforts nevertheless reduced the chances and allowed John to remain employed and productive.

Without a proactive response, the only alternative is to detect and respond to an event after it occurs, absorbing the cost of the impact and then attempting to minimize its effects.

The Path to Proactive Risk Mitigation

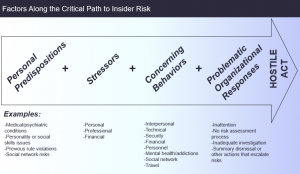

Eric Shaw and Laura Sellers created the ‘Critical Path to Insider Risk’ in 2015, after studying insider threat cases in the U.S. intelligence community and at the Department of Defense. They concluded that perpetrators exhibit observable indicators prior to their acts. This concept is represented in the accompanying graphic.

Source: Eric Shaw and Laura Sellers (2015) “Application of the Critical-Path Method to Evaluate Insider Risks,” Studies in Intelligence, Volume 59, Number 2, June, pages 41-48. The Central Intelligence Agency, Washington, DC.

The practical application of these findings is that knowledge of ‘personal predispositions’ and behavioral indicators can inform the judgment of experts to determine whether an insider is on the path to becoming a risk.

Based on that judgment, a measured and effective response can be planned to assess the risk through preliminary assessments – and perhaps a complete investigation, if warranted. The goal is to mitigate or prevent the insider risk event by engaging with the potential threat early. This is precisely what occurred in John’s case. The company responded effectively to ‘turn John around’ and prevent potentially hostile and harmful acts from occurring.

Technical and Non-Technical Risk Indicators

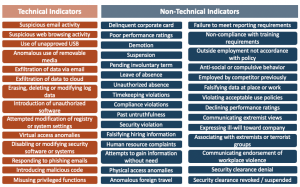

The Defense Counterintelligence and Security Agency (DCSA) Center for Development of Security Excellence published a list of potential risk indicators, which are categorized in the accompanying graphic into ‘Technical Indicators’ and ‘Non-Technical Indicators.’ Technical indicators can be detected by monitoring and analyzing computer and network activities. Non-technical indicators typically occur off the computer and network and therefore cannot be detected on those systems.

Insider threat potential risk indicators categorized by whether or not they can be commonly detected by monitoring computer and network activity.

While the average enterprise insider threat program might not share the same objectives as DCSA, the agency’s human-centric view of the challenge is instructive to companies because the cause of insider threat problems is, by definition, known individuals associated with and managed by the organization.

Effort and resources allocated to gathering, integrating, and analyzing non-technical indicators to better know those individuals can improve the effectiveness of programs that rely mostly on technical indicators to prioritize higher-risk employees. In this regard, non-technical indicators help programs get ahead of insider threat problems, rather than simply react to them.

Using Non-Technical Risk Indicators

Non-technical indicators are available within most company systems. For example, human resource information systems will contain data about promotions, demotions, suspensions, performance ratings, training records, and previous employers. Security information systems may have records of violations, anomalous attempts to gain access to unauthorized areas, and, in the case of the defense and aerospace industry, security-clearance denials.

Facilitating the identification and reporting of additional kinds of non-technical behaviors can be more challenging. For example, ‘See Something, Say Something’ programs have limited utility for multiple reasons. First, co-workers often do not consciously recognize the indicators until they are significant or until something bad happens. Second, if they do recognize a concern, they rarely report it because they do not see it as significant, or they do not want to get someone they like in trouble.

To overcome these challenges, insider threat programs need to repeatedly communicate that the goal of the program is to mitigate risks in a proactive and positive manner, helping employees while protecting company assets. As this goal is accomplished, stakeholders, supervisors, and employees will take notice, which will increase compliance and participation in the reporting program.

Next, insider threat programs need to facilitate the reporting of anomalous activity by supervisors. This can be accomplished via direct conversations, indirectly through human resources, or by using surveys. The results of this reporting should then inform the insider threat program’s threat detection capability.

Temporal Analysis

The importance of integrating and analyzing indicators over time cannot be overstated. Let’s consider a fictitious scenario in which non-technical behavioral indicators accumulate and raise an employee’s threat level:

Jolene has been with her company for three years. Initially, she was a good performer but that has changed over the past two years. She has grown increasingly unhappy with her job as a database administrator and her personal life is in shambles. She finds her role trivial and she feels the company is not treating her fairly compared to others, which she has expressed to human resources. She applied for a position in another department but was not selected, which made her even more angry and frustrated. She has access to mission-critical systems with authorization to create and destroy databases, tables, and records. Her supervisor works from another office location and does not meet with her more than once every two weeks. Outside of work, Jolene barely has enough money to pay rent for a two-bedroom apartment since her boyfriend left town. Moreover, she recently wrecked her truck and her cat is sick again. She is not sleeping well and has turned to drugs and alcohol.

Jolene has moved far along the critical path to insider risk. She has multiple stressors, exhibits concerning behaviors, and has experienced problematic organizational responses. And she has access to critical company systems.
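To make the idea of integrating indicators over time concrete, here is a minimal sketch in Python. It is an illustration only, not a method described in this article or used by any particular product: the `Indicator` record, the category names, the weights, the dates, and the 180-day half-life are all hypothetical assumptions. The sketch sums analyst-assigned weights with an exponential decay, so that recent indicators count more than old ones, and tracks which critical-path categories have appeared by a given date.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Indicator:
    observed: date   # when the indicator was recorded
    category: str    # e.g. "stressor", "concerning_behavior", "org_response"
    weight: float    # hypothetical severity weight assigned by an analyst


def decayed_score(indicators, as_of, half_life_days=180.0):
    """Sum indicator weights, halving each one every `half_life_days`."""
    total = 0.0
    for ind in indicators:
        age_days = (as_of - ind.observed).days
        if age_days < 0:
            continue  # ignore anything recorded after the evaluation date
        total += ind.weight * 0.5 ** (age_days / half_life_days)
    return total


def categories_seen(indicators, as_of):
    """Return the distinct indicator categories recorded on or before `as_of`."""
    return {ind.category for ind in indicators if ind.observed <= as_of}


# Illustrative, made-up history loosely modeled on the scenario above.
history = [
    Indicator(date(2023, 1, 1), "concerning_behavior", 1.0),  # performance decline
    Indicator(date(2023, 5, 15), "org_response", 2.0),        # transfer request denied
    Indicator(date(2023, 6, 20), "stressor", 1.5),            # financial difficulty
]

for checkpoint in (date(2023, 3, 1), date(2023, 6, 30)):
    score = decayed_score(history, checkpoint)
    print(checkpoint, round(score, 2), sorted(categories_seen(history, checkpoint)))
```

Evaluating the same history at successive checkpoints shows the trend rather than a single snapshot: a score that keeps rising while new categories appear is the kind of pattern that would prompt a closer look by human experts, who remain responsible for the actual judgment.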

It would be wise to fully evaluate and then mitigate any risk that Jolene presents, with the goal of protecting company assets and assisting a struggling employee. Yet very few companies have the capability to assemble and analyze this non-technical information to effectively identify when an insider like Jolene is on the path to insider risk. Assessing employees’ private lives through background or credit checks or other measures is not even necessary in most cases; many other indicators are already collected by the organization and readily available.

The inadequate use of non-technical indicators might be because many insider threat programs grow out of existing cybersecurity programs. Those programs rely on tools such as user and entity behavior analytics (UEBA) and security information and event management (SIEM), which were developed to evaluate large volumes of technical data using rules and machine learning to identify technical behavioral anomalies.

As discussed above, when looking at insider threats as caused by known humans, these technical indicators are perhaps one-third of the picture. Risk-scoring models built solely around technical indicators are not designed to put the anomalies that they detect into the broader context of the critical path to insider risk. These models can only be effective if they add non-technical behavioral indicators to the analytical mix.

Multi-Disciplinary Technology Platforms for Evaluating Insider Threats

Insider threat programs should consist of diverse experts representing human resources, legal, information security, cybersecurity, information technology, physical security, behavioral science, and counterintelligence. These disciplines bring data and perspective when evaluating insider threats. They weigh the evidence and give opinions on whether the behavior is indicative of a threat.

The problem is that this approach does not scale well in organizations with large numbers of employees since no team of experts could keep up.

But the experts can encode their judgments and wisdom in analytic tools that apply the complex reasoning that goes into contextualized analysis of insider threats. For this approach, Bayesian inference networks are an ideal solution.

Bayesian networks can be built to probabilistically model expert reasoning across multiple domains using the full range of technical and non-technical behavioral indicators of insider risk. The result is a vastly improved capability to identify high-risk insiders who have committed threat activities, as well as those who are on the Critical Path to potentially commit them in the future. The probabilistic model enables the proactive response necessary to protect company assets, including the insiders themselves.
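As a rough illustration of the probabilistic reasoning involved, the Python sketch below uses the simplest possible network structure: a single hidden ‘elevated risk’ node whose indicator nodes are assumed to be conditionally independent (a naive Bayes model). This is a deliberate simplification and not the model used by any specific platform; a production network would encode many more nodes and dependencies. The indicator names, the prior, and every probability in `LIKELIHOODS` are hypothetical values chosen for illustration, standing in for numbers that experts would supply.

```python
def posterior(prior, observed, likelihoods):
    """Posterior P(elevated risk | evidence) under a naive Bayes assumption.

    likelihoods maps an indicator name to a pair:
        (P(indicator | elevated risk), P(indicator | not elevated risk))
    Indicators missing from `observed` are treated as known to be absent.
    """
    odds = prior / (1.0 - prior)
    for name, (p_if_risk, p_if_not) in likelihoods.items():
        if name in observed:
            odds *= p_if_risk / p_if_not                  # evidence present
        else:
            odds *= (1.0 - p_if_risk) / (1.0 - p_if_not)  # evidence absent
    return odds / (1.0 + odds)


# Hypothetical expert-elicited values; not taken from any real data set.
PRIOR = 0.01
LIKELIHOODS = {
    "declining_performance": (0.60, 0.20),   # non-technical (HR records)
    "expressed_grievance": (0.50, 0.10),     # non-technical (HR records)
    "anomalous_db_activity": (0.30, 0.02),   # technical (UEBA/SIEM alert)
}

technical_only = posterior(PRIOR, {"anomalous_db_activity"}, LIKELIHOODS)
combined = posterior(
    PRIOR,
    {"anomalous_db_activity", "declining_performance", "expressed_grievance"},
    LIKELIHOODS,
)
print(f"technical only: {technical_only:.3f}  combined: {combined:.3f}")
```

Under these assumed numbers, the same technical anomaly yields a much higher posterior when it is accompanied by non-technical indicators, which is the contextual effect the article describes. The output is a probability to help prioritize expert review, not a verdict about an individual.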

About the Author

David Sanders is Director of Insider Threat Operations at Haystax, a business unit of Fishtech Group. Previously, he designed and managed the insider threat program at Harris Corporation, now L3Harris Technologies. David also served on the U.S. government’s National Insider Threat Task Force (NITTF). David can be reached online at ([email protected] or https://www.linkedin.com/in/david-sanders-haystax/) and at our company website http://www.haystax.com/